Active Research

My research interests lie in the analysis of complex data for real problems, leveraging my background in computer vision, computer graphics, and machine learning. Recently, one of the application domains I have been working on is computational forensics and its analysis of trace evidence.

For years computer vision researchers have been working on the

problem of tracking objects and features across video footage.

Recently, tracking of human faces has acquired an even more active role

in the field, being a necessary step for higher level applications such

as surveillance,

recognition, and analysis of behavior. It is hard to

develop robust computer vision techniques that require minimal, or

no, human intervention for a large set of possible inputs.

Tracking involves the proper modeling of the problem and its

dynamics, a principled design of the uncertainty representation, and an

accurate

way to obtain indirect measurements of a pattern's occurrence

over time, each with its own uncertainty.

When all these steps are taken into account, tracking is an instance of a Bayesian filter, such as the Kalman filter or the particle filter. Two essential steps are often overlooked: the proper uncertainty modeling of the tracking state, and the proper uncertainty modeling of the observation that drives the Bayesian filter. I worked on both during my PhD and afterward with my students.
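As a minimal sketch of the predict/update cycle behind such filters, the Python below runs a one-dimensional Kalman filter under an assumed random-walk model. The names `Gaussian`, `predict`, `update`, and `track`, and the noise variances, are illustrative assumptions and are not taken from the papers linked on this page.

```python
from dataclasses import dataclass


@dataclass
class Gaussian:
    """State belief: a mean and the variance (uncertainty) around it."""
    mean: float
    var: float


def predict(state: Gaussian, process_var: float) -> Gaussian:
    # Assumed random-walk dynamics: the mean is unchanged and the
    # uncertainty grows by the process noise variance.
    return Gaussian(state.mean, state.var + process_var)


def update(state: Gaussian, z: float, obs_var: float) -> Gaussian:
    # The gain weighs the state uncertainty against the observation uncertainty.
    gain = state.var / (state.var + obs_var)
    mean = state.mean + gain * (z - state.mean)
    var = (1.0 - gain) * state.var
    return Gaussian(mean, var)


def track(observations, prior: Gaussian, process_var: float, obs_var: float):
    """Run predict then update for every observation; return all posteriors."""
    state = prior
    history = []
    for z in observations:
        state = predict(state, process_var)
        state = update(state, z, obs_var)
        history.append(state)
    return history


# Hypothetical numbers, chosen only to make the arithmetic easy to follow.
posteriors = track([2.0], Gaussian(0.0, 1.0), process_var=0.0, obs_var=1.0)
print(posteriors[0])
```

With a prior of mean 0 and variance 1, no process noise, and a single observation of 2 with unit variance, the gain is 0.5, so the posterior has mean 1.0 and variance 0.5. Both variances appear explicitly in the gain, which is why the quality of the state and observation uncertainty models matters so much in practice.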

We developed tracking techniques for deformable models (this, this, this, and this), and for team sports (this and this). We also have a gentle introduction to Bayesian Filters.

Deformable models are a powerful tool in computer graphics and

computer vision and can be used for modeling

(this, this, this, and this) and tracking (this, this, this, and this).

We explored deformable models to represent, for example, faces and hands. Their modeling, rendering, and tracking can be used in several HCI activities, as well as for interaction with, and understanding of, people with disabilities.

Proper

computer tools can have an incredible impact on the quality of life of

those with different disabilities. Sometimes these tools are

simple concepts, but many possible applications push the frontier of

our knowledge, and require significant research.

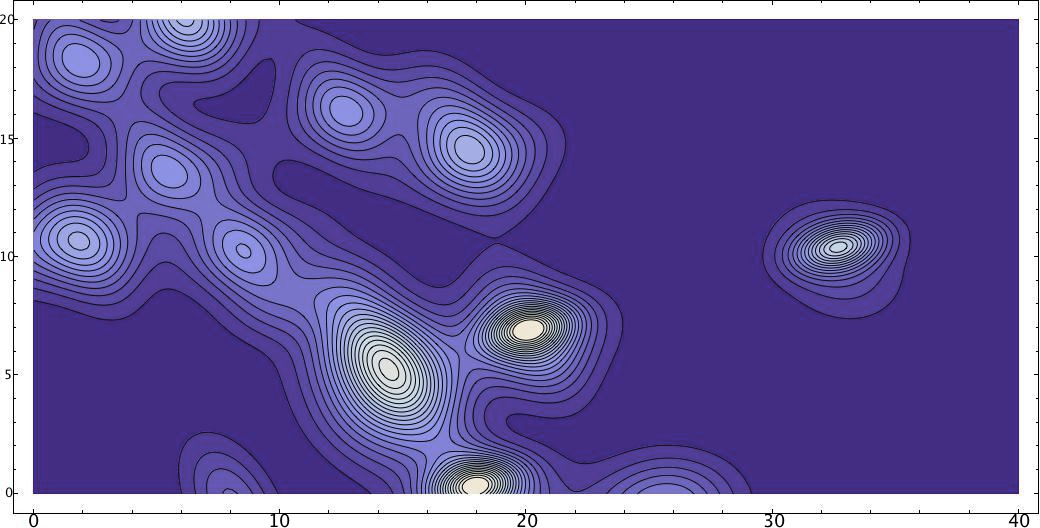

Computational forensics is an intrinsically multidisciplinary area that requires several fields of computer science and engineering (such as computer graphics, computer vision, signal processing) as well as statistics – it interacts directly with other fields of science as well, to help the understanding and authentication of different phenomena.

In the field of digital media, we have published a survey on the forensic analysis of digital objects, and I have performed a forensic analysis for the Brazilian president as a consultant. We also developed a new image descriptor capable of detecting certain types of steganography and performing scene categorization.

My interests in

computational forensics go beyond the analysis of

digital artifacts. I have worked on 3D reconstruction for

evidence analysis (this

and this),

shredded document

recovery (this and

this), and

super-resolution for image enhancement.

We have done a lot on media phylogeny, but this subject gets a section of its own.

With social networks and the ubiquitous availability of the

internet, digital content

is widespread and easily redistributable, either lawfully or

unlawfully.

Images and other digital content can also mutate as they spread out.

For example, after images are posted on the internet, other users

can copy, resize, and/or re-encode them prior to reposting,

generating similar but not identical copies. While it is

straightforward to detect exact image duplicates, this is not the

case for slightly modified versions.
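A toy illustration of that gap, using made-up pixel values rather than real image files: a cryptographic digest matches only byte-identical content, so a copy brightened by two gray levels is reported as unrelated, while a simple pixel-wise distance still shows it is close. The helper names `digest` and `mean_abs_diff` are hypothetical, and the distance is only a stand-in for the far more robust descriptors used in real near-duplicate detection systems.

```python
import hashlib


def digest(pixels):
    """SHA-256 of the raw pixel bytes; equal only for identical content."""
    return hashlib.sha256(bytes(pixels)).hexdigest()


def mean_abs_diff(a, b):
    """Average absolute difference between two equally sized pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical 6-pixel grayscale "images".
original = [10, 50, 90, 130, 170, 210]
exact_copy = list(original)
brightened = [min(255, p + 2) for p in original]

print(digest(original) == digest(exact_copy))   # exact duplicate: digests match
print(digest(original) == digest(brightened))   # near duplicate: digests differ
print(mean_abs_diff(original, brightened))      # small distance reveals similarity
```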

Several researchers have successfully focused on the design and

deployment of

near-duplicate detection and recognition systems to identify the

cohabiting versions of a given document in the wild. But only recently

have we started to go

beyond the detection of near-duplicates, and to look for the structure

of

evolution within a set of images (or media in general).

We have pioneered the area of media phylogeny, and even named the problem. We then extended the approach to video information, forests (this, this, and this), large datasets, optimum branching, multiple parent structures, and studied the dissimilarities and distance concepts involved in the problem itself.
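To make the idea concrete, here is a small sketch of how a phylogeny tree can be recovered from an asymmetric dissimilarity matrix with a greedy, Kruskal-style heuristic. It assumes that `d[i][j]` is low when image `j` is likely derived from image `i`. The matrix values and the function name `oriented_kruskal` are made up for illustration, and this greedy pass is not the optimum-branching formulation mentioned above.

```python
def oriented_kruskal(d):
    """Greedy tree reconstruction from an asymmetric dissimilarity matrix.

    Returns a list `parent` where parent[j] is the parent of node j and
    parent[r] == r marks the root of the reconstructed tree.
    """
    n = len(d)
    parent = list(range(n))  # every node starts as the root of its own tree

    def root(v):
        while parent[v] != v:
            v = parent[v]
        return v

    # All directed edges i -> j, cheapest first.
    edges = sorted((d[i][j], i, j) for i in range(n) for j in range(n) if i != j)

    added = 0
    for _, i, j in edges:
        if added == n - 1:
            break
        # Accept i -> j only if j has no parent yet and i is not already
        # inside the tree rooted at j (which would create a cycle).
        if parent[j] == j and root(i) != j:
            parent[j] = i
            added += 1
    return parent


# Hypothetical dissimilarities: image 0 is the original, 1 and 3 derive
# from 0, and 2 derives from 1.
d = [
    [0.0, 1.0, 3.0, 1.5],
    [4.0, 0.0, 1.2, 5.0],
    [6.0, 5.0, 0.0, 7.0],
    [4.5, 5.5, 6.5, 0.0],
]
print(oriented_kruskal(d))  # [0, 0, 1, 0]
```

The asymmetry is what carries the direction of evolution: turning the original into a derived copy is cheap, while the reverse is expensive, so the cheapest directed edges tend to point from parent to child.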

In machine learning, our core results include a Bayesian approach to combine binary classifiers into a multiclass classifier, an image descriptor capable of capturing information about steganography and scene description simultaneously, a powerful method of building graph structures from data, and a bag-of-words framework to perform classification and retrieval in graphs without using graph-matching algorithms. We also have interesting results in computer graphics, performing clustering on 3D meshes.

On the

application side, we used learning to classify fruits and

vegetables from images, which also resulted in a patent.

Our group also investigates machine learning and computer vision

applied to diabetic retinopathy,

the leading cause of blindness for the economically active population

and responsible for 5% of all blindness cases in the world. Our

approach is scalable to multiple causes and populations, and performs

well even on cross-dataset evaluations (this, this, and this).

During a grant with the

Brazilian Revenue Service, we worked on

fraud-ranking methods to select which international commerce orders

should be inspected upon entrance/exit of the country. Only a small

subset of results was published (this, this, and this) due to

Brazilian fiscal secrecy laws.

Recently, we used CVs and collaboration patterns to study publication patterns in different areas of knowledge (this and this). We have initial results in building graph structures from data, and have grouping results applied to meshes and media phylogeny.

It is very hard to make agents and computer procedures intelligent (it is, after all, a whole field by itself!), but in many areas restricting and conditioning what "intelligent" means can turn it into an easier and more tractable problem.

In many applications of Computer Graphics, such as virtual reality, games, and simulations, the computer has to control agents that will coexist, and perhaps even interact, with user-controlled agents. Although for humans it is natural to be aware of the surrounding environment and react accordingly, it is a whole different story on the computer side.

In our work we automate reactive behaviors for a computer-guided agent. Tasks such as avoiding collisions with moving obstacles and reaching a target that is itself moving are achieved in a parameterized way, allowing each agent to be unique.

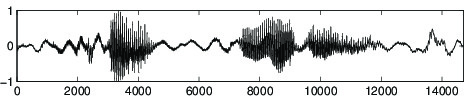

Additionally, we have explored signal processing and wavelets to manipulate and analyze motion capture data.

There are many interesting and challenging problems related to

sound and speech manipulation. Recently I have been working with

Audio 3D, in the context of accessibility for the Blind and

Low-Vision, as part of our Vision for the Blind project. We

have interesting results in the personalization of head-related transfer functions (HRTFs)

using Isomap and a neural network regressor.

In the past (look at my MSc thesis)

I've studied the characteristics and properties of transformations of

audio signals, using both time and frequency representations. One nice

result is the use of the Local Cosine Transform to accomplish time warping of audio signals

avoiding large frequency distortions. We then applied these methods to

motion capture processing and analysis.

In recent years, wavelets and filter banks have established themselves as a very powerful set of tools for the processing, analysis, and manipulation of a wide variety of signals.

In Computer Graphics wavelets are an important tool for several different applications, such as surface modeling and representation, texture analysis and synthesis, radiosity, and rendering.

Check our book for a little bit more about it.