Accuracy measure

The accuracy of a classifier can be measured in any set: training,

evaluation, and test. Let  be any one of these sets and

be any one of these sets and  be the

number of samples in

be the

number of samples in  . The accuracy

. The accuracy  is measured by taking

into account that the classes may have different sizes in

is measured by taking

into account that the classes may have different sizes in  . If

there are two classes, for example, with very different sizes and a

classifier always assigns the label of the largest class, its accuracy

will fall drastically due to the high error rate on the smallest

class.

. If

there are two classes, for example, with very different sizes and a

classifier always assigns the label of the largest class, its accuracy

will fall drastically due to the high error rate on the smallest

class.

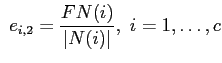

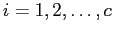

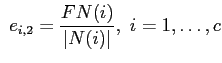

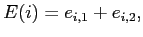

Let  ,

,

, be the number of samples in

, be the number of samples in  from each class

from each class  . We define

. We define

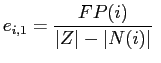

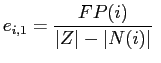

and and  |

(1) |

where  and

and  are the false positives and false negatives,

respectively. That is,

are the false positives and false negatives,

respectively. That is,  is the number of samples from other

classes that were classified as being from the class

is the number of samples from other

classes that were classified as being from the class  in

in  ,

and

,

and  is the number of samples from the class

is the number of samples from the class  that were

incorrectly classified as being from other classes in

that were

incorrectly classified as being from other classes in  . The

errors

. The

errors  and

and  are used to define

are used to define

|

(2) |

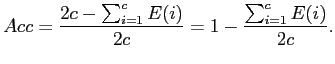

where $E(i)$ is the partial sum error of class $i$. Finally, the accuracy $L$ of the classification is written as

$$L = \frac{2c - \sum_{i=1}^{c} E(i)}{2c} = 1 - \frac{\sum_{i=1}^{c} E(i)}{2c}. \qquad (3)$$
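The measure can be illustrated with a minimal Python sketch. It assumes the labels are given as two equal-length sequences and that the classes are those present among the true labels. The function name `accuracy_measure` and its arguments are hypothetical, chosen for illustration only, and are not part of the library. For each class it computes the false-positive rate over the samples of the other classes, the false-negative rate over the samples of the class itself, and then averages the partial sum errors over the $2c$ terms.

```python
from collections import Counter


def accuracy_measure(true_labels, predicted_labels):
    """Sketch of L = 1 - sum_i E(i) / (2c), with c the number of true classes."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")

    classes = sorted(set(true_labels))
    c = len(classes)
    if c < 2:
        raise ValueError("at least two classes are required")

    n = len(true_labels)               # |Z|
    class_size = Counter(true_labels)  # N_Z(i)
    fp = Counter()                     # FP(i): others classified as i
    fn = Counter()                     # FN(i): samples of i classified as others
    for t, p in zip(true_labels, predicted_labels):
        if t != p:
            fn[t] += 1
            fp[p] += 1

    total_error = 0.0
    for i in classes:
        e1 = fp[i] / (n - class_size[i])  # false positives over other classes
        e2 = fn[i] / class_size[i]        # false negatives over class i
        total_error += e1 + e2            # partial sum error E(i)

    return 1.0 - total_error / (2 * c)


# Two classes of sizes 90 and 10; the classifier always answers class 1.
true = [1] * 90 + [2] * 10
pred = [1] * 100
print(accuracy_measure(true, pred))  # 0.5
```

In this example a plain hit rate would report 0.9, whereas the measure above gives 0.5: class 1 has a false-positive rate of 10/10 and class 2 has a false-negative rate of 10/10, so each class contributes a partial sum error of 1.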


Joao Paulo Papa

2009-09-30
